Introduction: The Digital Transformation Imperative

In an era where digital interactions generate unprecedented volumes of data every second, organizations face mounting pressure to process, protect, and leverage information responsibly. The convergence of artificial intelligence with data processing technologies has created a new paradigm for managing digital content, ensuring compliance, and protecting sensitive information. From automated content moderation systems that safeguard online communities to sophisticated AI agents that navigate complex compliance frameworks, these technologies are reshaping how businesses operate in the digital age.

The rapid acceleration of digital transformation, particularly following global shifts toward remote work and digital-first business models, has amplified the importance of automated data processing solutions. Organizations now handle far more unstructured data than ever before, including user-generated content, legal documents, communications, and multimedia files. This explosion of data brings both opportunities and challenges: while businesses can extract valuable insights and improve operations, they must also navigate increasingly complex regulatory landscapes and protect sensitive information from exposure.

The Foundation: Content Moderation in the Digital Age

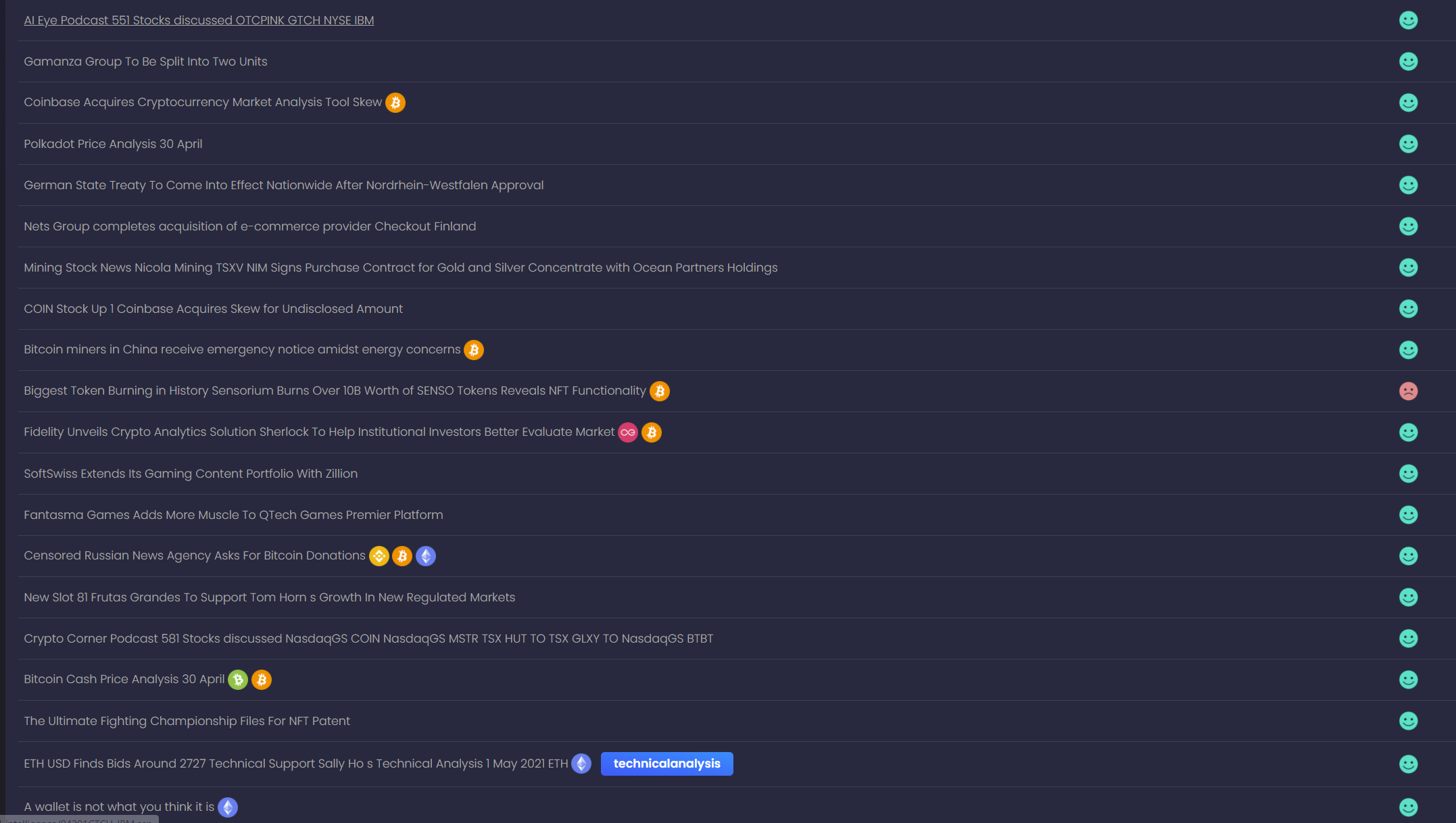

Content moderation has evolved from simple keyword filtering to sophisticated AI-powered systems capable of understanding context, intent, and nuance. Modern text moderation APIs employ advanced natural language processing to identify harmful content across multiple languages and cultural contexts. These systems go beyond detecting explicit violations to recognize subtle forms of harassment, misinformation, and manipulative content that might escape traditional filters.
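To make the contrast with keyword filtering concrete, here is a minimal sketch of that older approach. The blocklist is a hypothetical placeholder. The sketch shows two limitations. Naive substring matching flags innocent words that merely contain a blocked term. Word-boundary matching fixes that problem but still has no notion of context or intent.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = ["scam", "idiot"]


def naive_flag(text: str) -> bool:
    """Substring matching: flags text containing a blocked string anywhere."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


# Word-boundary matching avoids flagging words that merely contain a term.
_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, BLOCKLIST)) + r")\b", re.IGNORECASE
)


def word_boundary_flag(text: str) -> bool:
    """Flags whole-word matches only, but still ignores context and intent."""
    return _PATTERN.search(text) is not None


if __name__ == "__main__":
    samples = [
        "Beware of this scam listing",       # a genuine warning sign
        "The scampi here is excellent",      # substring false positive
        "Calling someone an idiot is rude",  # flagged, though it discusses insults
    ]
    for s in samples:
        print(naive_flag(s), word_boundary_flag(s), s)
```

The third sample is the interesting one. Both filters flag it, yet it criticizes an insult rather than delivering one. Telling those two cases apart is the kind of context that NLP-based moderation is meant to capture.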

The scale of content moderation challenges is staggering. Social media platforms process billions of posts daily, e-commerce sites manage millions of product listings and reviews, and educational platforms must ensure safe learning environments for students of all ages. According to research from Stanford’s Internet Observatory, automated content moderation systems now handle over 95% of initial content screening on major platforms, with human moderators focusing on edge cases and policy development.
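Automation handles initial screening and humans handle edge cases. One common way to implement that split is confidence-threshold routing. The sketch below assumes a hypothetical classifier that returns a harm probability between 0 and 1. The threshold values are illustrative, not recommendations.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass(frozen=True)
class Thresholds:
    # Illustrative values; real systems tune these per policy and language.
    approve_below: float = 0.2
    remove_above: float = 0.9


def route(harm_score: float, t: Thresholds = Thresholds()) -> Decision:
    """Route content given a (hypothetical) model's harm probability in [0, 1]."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be in [0, 1]")
    if harm_score < t.approve_below:
        return Decision.APPROVE
    if harm_score > t.remove_above:
        return Decision.REMOVE
    # The ambiguous middle band goes to a human moderator.
    return Decision.HUMAN_REVIEW


if __name__ == "__main__":
    for score in (0.05, 0.5, 0.97):
        print(score, route(score).value)
```

Widening the middle band sends more items to people and fewer automated mistakes to users. Narrowing it does the opposite.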

The sophistication of modern content moderation extends to multimodal analysis, where systems simultaneously evaluate text, images, audio, and video content. These integrated approaches are essential for identifying coordinated manipulation campaigns, detecting deepfakes, and preventing the spread of harmful content that combines multiple media types. Organizations implementing comprehensive automated content filtering solutions report significant improvements in user safety metrics while reducing the psychological burden on human moderators who would otherwise be exposed to disturbing content.
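One simple way to combine modalities is late fusion: each modality is scored by its own model, and the scores are then merged. The sketch below makes two assumptions. The per-modality harm scores (in [0, 1]) come from hypothetical detectors, and the weights are placeholders. It merges the scores with a weighted noisy-OR, so strong evidence from any single modality is enough to raise the combined score.

```python
from typing import Mapping

# Hypothetical per-modality weights expressing how much each detector is trusted.
WEIGHTS = {"text": 0.9, "image": 0.8, "audio": 0.7, "video": 0.8}


def fuse_scores(scores: Mapping[str, float],
                weights: Mapping[str, float] = WEIGHTS) -> float:
    """Weighted noisy-OR: 1 - prod(1 - w_m * s_m) over the modalities present."""
    survive = 1.0  # probability that no modality "fires"
    for modality, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {modality} must be in [0, 1]")
        w = weights.get(modality, 0.5)  # default for unlisted modalities (assumption)
        survive *= 1.0 - w * score
    return 1.0 - survive


if __name__ == "__main__":
    # A mildly suspicious caption paired with a more suspicious image.
    print(fuse_scores({"text": 0.3, "image": 0.6}))
```

Averaging would let a benign caption dilute a harmful image. Under noisy-OR, the combined score is never lower than any single weighted modality score.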

Privacy-First Architecture: Redaction and Anonymization Technologies

As data privacy regulations proliferate globally, with frameworks like GDPR, CCPA, and emerging national privacy laws, organizations must implement robust systems for protecting personally identifiable information (PII). Modern data redaction services use machine learning models trained on diverse document types to identify and remove sensitive information automatically. These systems recognize not just obvious identifiers like Social Security numbers and credit card numbers, but also quasi-identifiers, such as ZIP code, birth date, and gender, that could be used in combination to re-identify individuals.
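As a baseline, the obvious identifiers can be caught with patterns plus a validity check. The sketch below handles two cases:

- U.S. Social Security numbers in the common dashed `AAA-GG-SSSS` format.
- Payment card numbers that pass the Luhn checksum.

Matches are replaced with typed placeholders. The patterns are simplified assumptions. They will miss unformatted or obfuscated values, which is the gap that ML-based redaction aims to close.

```python
import re

# Simplified SSN pattern (an assumption): dashed format only; excludes
# area 000, 666 and 9xx, group 00 and serial 0000.
SSN_RE = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")
# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Replace SSNs and Luhn-valid card numbers with typed placeholders."""
    text = SSN_RE.sub("[SSN]", text)

    def _card(m: re.Match) -> str:
        # Only redact digit runs that are plausibly real card numbers.
        return "[CARD]" if luhn_valid(m.group()) else m.group()

    return CARD_RE.sub(_card, text)


if __name__ == "__main__":
    print(redact("SSN 123-45-6789, card 4111 1111 1111 1111."))
```

The Luhn check filters out long digit runs such as order references that merely look like card numbers. It does not confirm that a card actually exists.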

The technical complexity of effective redaction goes beyond simple pattern matching. Context-aware redaction systems must understand document structure, maintain semantic meaning while removing identifying information, and ensure that redacted documents remain useful for their intended purposes. Research from MIT’s Computer Science and Artificial Intelligence Laboratory demonstrates that advanced redaction techniques can preserve up to 90% of document utility while meeting stringent privacy requirements.
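One technique for keeping redacted documents useful is consistent pseudonymization. Each distinct entity is replaced by the same typed placeholder everywhere it appears, so a reader can still tell that "PERSON_1" signed an agreement and the same "PERSON_1" later terminated it. The sketch below assumes an upstream detector (for example, an NER model) has already found the entities. It receives them as a hypothetical list of (text, type) pairs.

```python
import re
from typing import Dict, Iterable, Tuple


def pseudonymize(text: str,
                 entities: Iterable[Tuple[str, str]]) -> Tuple[str, Dict[str, str]]:
    """Replace each entity string with a stable placeholder such as PERSON_1.

    `entities` is assumed to come from an upstream detector; this function
    only performs consistent substitution. The returned mapping reverses the
    process, so it must be protected as carefully as the original data.
    """
    mapping: Dict[str, str] = {}
    counters: Dict[str, int] = {}
    for surface, label in entities:
        if surface in mapping:
            continue
        counters[label] = counters.get(label, 0) + 1
        mapping[surface] = f"{label}_{counters[label]}"
    if not mapping:
        return text, mapping
    # Longest strings first so "Acme Corp" wins over "Acme"; the lookarounds
    # stop matches inside longer words.
    alternatives = "|".join(re.escape(s) for s in sorted(mapping, key=len, reverse=True))
    pattern = re.compile(r"(?<!\w)(?:" + alternatives + r")(?!\w)")
    return pattern.sub(lambda m: mapping[m.group()], text), mapping


if __name__ == "__main__":
    doc = "Jane Doe signed for Acme Corp. Later, Jane Doe notified Acme Corp."
    redacted, _ = pseudonymize(doc, [("Jane Doe", "PERSON"), ("Acme Corp", "ORG")])
    print(redacted)
```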

Complementing redaction technologies, live anonymization systems provide real-time privacy protection for streaming data and interactive applications. These solutions are particularly crucial in healthcare, where researchers need access to medical data for studies while maintaining patient privacy, and in financial services, where transaction data must be analyzed for fraud detection without exposing customer information. Differential privacy techniques, as outlined in research from Harvard’s Privacy Tools Project, add calibrated statistical noise so that anonymized datasets retain their statistical validity while strictly limiting how much any released result can reveal about a single individual, which greatly reduces the risk of re-identification.
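The core idea of differential privacy can be shown with the Laplace mechanism applied to a counting query. Adding or removing one person's record changes a count by at most 1, so the query's sensitivity is 1. Adding Laplace noise with scale Δf/ε = 1/ε therefore yields an ε-differentially private answer. The sketch uses only Python's standard library and draws Laplace noise as the difference of two exponential samples. The patient records and ε = 0.5 are arbitrary illustrative choices. Production systems should rely on a vetted DP library rather than hand-rolled noise.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def dp_count(records: Iterable[T], predicate: Callable[[T], bool],
             epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Epsilon-DP count of records matching `predicate` (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical records: one per patient, ages 20 to 79.
    patients = [{"age": a} for a in range(20, 80)]
    noisy = dp_count(patients, lambda p: p["age"] >= 65, epsilon=0.5,
                     rng=random.Random(42))
    print(f"noisy count of patients aged 65+: {noisy:.1f}")
```

Smaller ε means more noise and stronger privacy. Under basic composition, the ε values of repeated queries on the same data add up, so real deployments track a total privacy budget.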

Intelligent Document Processing: AI in Legal and Contract Review

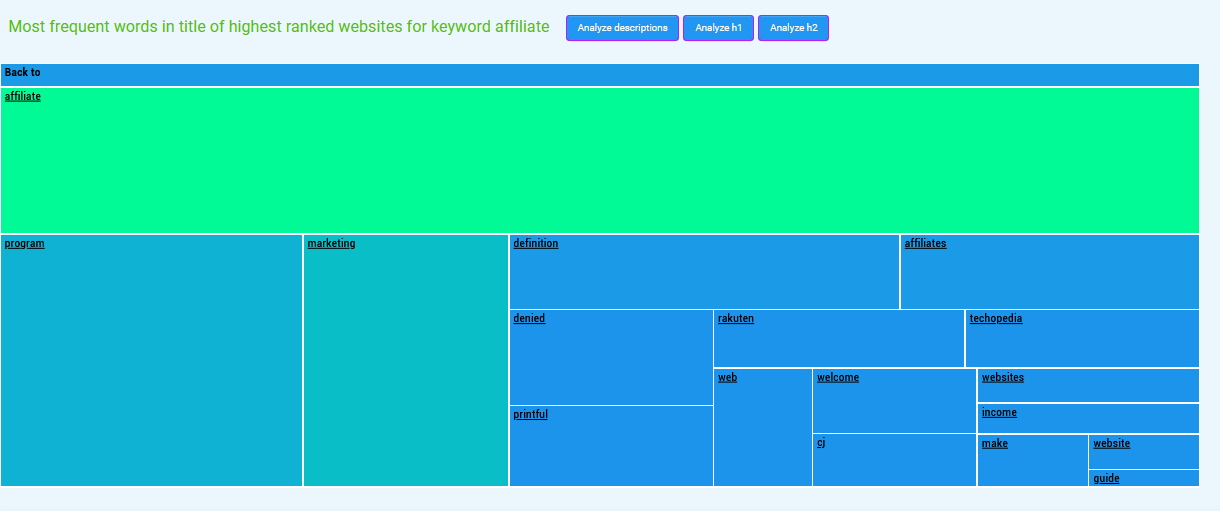

The legal industry’s digital transformation has been accelerated by AI-powered document analysis tools that can process contracts, agreements, and legal documents with unprecedented speed and accuracy. Modern automated contract review tools leverage natural language understanding to identify key clauses, flag potential risks, and ensure compliance with organizational policies and regulatory requirements.

These systems employ sophisticated techniques including named entity recognition, relationship extraction, and semantic analysis to understand complex legal language and identify obligations, rights, and potential liabilities within contracts. The technology has evolved from simple clause detection to understanding the interplay between different contract sections and identifying conflicts or ambiguities that might pose risks. According to research published in the Journal of Artificial Intelligence Research, AI-powered contract analysis can reduce review time by up to 80% while improving accuracy in identifying non-standard terms.
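The sketch below is a much-simplified version of clause-level risk flagging. It splits a contract into numbered clauses and checks each clause against a small set of risk rules. The rule names, regular expressions, and sample contract are hypothetical. The systems described above rely on language models rather than hand-written patterns. Still, pairing each clause with the reasons it was flagged is a useful way to picture what such tools report.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical risk rules for illustration; not drawn from any real product.
RISK_RULES = {
    "auto_renewal": re.compile(r"\bautomatically\s+renews?\b", re.IGNORECASE),
    "unlimited_liability": re.compile(r"\bunlimited\s+liability\b", re.IGNORECASE),
    "unilateral_change": re.compile(
        r"\bmay\s+(?:amend|modify|change)\b.*?\bat\s+any\s+time\b", re.IGNORECASE
    ),
}

# A clause starts with "N." at the beginning of a line and runs until the next one.
CLAUSE_RE = re.compile(r"^\s*(\d+)\.\s+(.*?)(?=^\s*\d+\.\s|\Z)",
                       re.MULTILINE | re.DOTALL)

SAMPLE_CONTRACT = (
    "1. The Supplier shall deliver the goods within 30 days.\n"
    "2. This Agreement shall automatically renew for successive one-year terms.\n"
    "3. The Customer accepts unlimited liability for any breach.\n"
)


@dataclass
class Flag:
    clause_no: int
    rule: str
    text: str


def review(contract: str) -> List[Flag]:
    """Return one Flag per (clause, rule) match, in clause order."""
    flags: List[Flag] = []
    for number, body in CLAUSE_RE.findall(contract):
        clause = body.strip()
        for name, pattern in RISK_RULES.items():
            if pattern.search(clause):
                flags.append(Flag(int(number), name, clause))
    return flags


if __name__ == "__main__":
    for flag in review(SAMPLE_CONTRACT):
        print(f"clause {flag.clause_no}: {flag.rule}")
```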

The impact extends beyond efficiency gains. By standardizing contract review processes and maintaining comprehensive audit trails, these tools help organizations ensure consistency in their legal operations and demonstrate compliance with regulatory requirements. Industries with high contract volumes, such as real estate, insurance, and procurement, have particularly benefited from the ability to process thousands of documents while maintaining quality control standards that would be impossible to achieve through manual review alone.

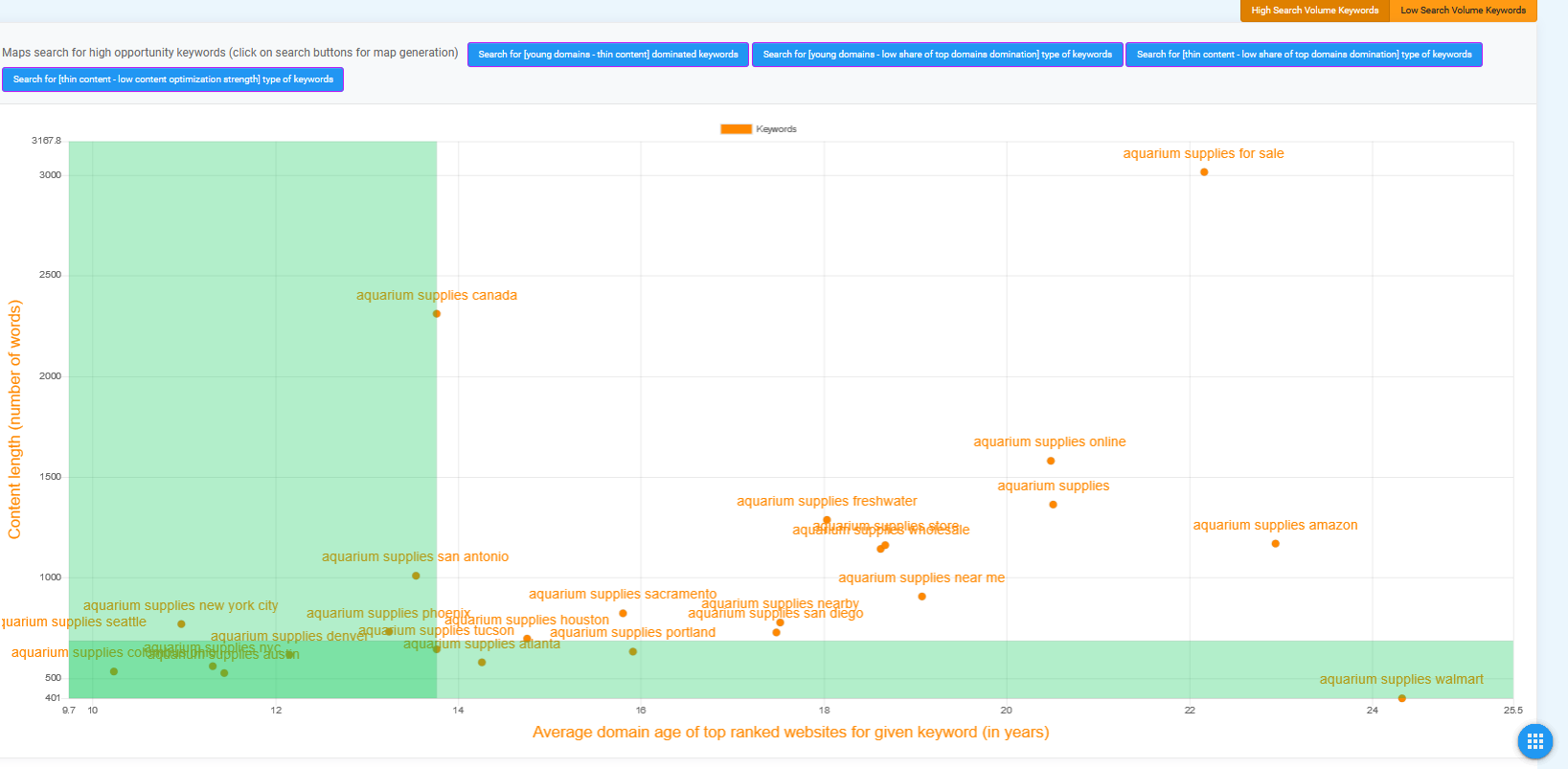

Web Intelligence: URL Categorization and Threat Detection

In the cybersecurity landscape, the ability to quickly classify and assess web resources has become critical for protecting organizations from online threats. Advanced web categorization databases rely on machine learning algorithms that analyze multiple signals, including content, structure, behavior, and reputation, to classify websites in real time. These systems protect users from phishing attempts, malware distribution sites, and other malicious content while enabling organizations to enforce acceptable use policies.

The sophistication of modern URL categorization goes beyond simple blacklisting. Dynamic analysis systems evaluate websites in isolated environments to detect malicious behavior, while machine learning models identify previously unknown threats based on patterns learned from millions of analyzed sites. Research from Carnegie Mellon’s CyLab shows that modern URL categorization systems can detect zero-day phishing sites with over 95% accuracy within minutes of their creation.
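The sketch below gives a small-scale picture of what combining signals can mean. It extracts a few lexical features from a URL with Python's standard `urllib.parse`. It looks up the domain in a small category table and sums the weights of suspicious features into a heuristic score. The category table, feature list, and weights are hypothetical stand-ins for the large databases and learned models described above. A real classifier would learn its weights from labeled data and add content, behavioral, and reputation signals.

```python
import ipaddress
from urllib.parse import urlsplit

# Hypothetical category database: registered domain -> category.
CATEGORY_DB = {"example.com": "business", "example.org": "reference"}

# Hypothetical hand-set weights; a trained model would learn these.
WEIGHTS = {"ip_host": 0.4, "at_sign": 0.3, "punycode": 0.3,
           "many_subdomains": 0.2, "long_url": 0.1, "no_https": 0.1}


def _is_ip(host: str) -> bool:
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def url_features(url: str) -> dict:
    """Boolean lexical features often associated with phishing URLs."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    return {
        "ip_host": _is_ip(host),
        "at_sign": "@" in parts.netloc,  # e.g. http://bank.example@evil.test/
        "punycode": any(label.startswith("xn--") for label in host.split(".")),
        "many_subdomains": host.count(".") >= 4,
        "long_url": len(url) > 100,
        "no_https": parts.scheme != "https",
    }


def categorize(url: str) -> tuple:
    """Return (category, heuristic risk score) for a URL."""
    host = (urlsplit(url).hostname or "").lower()
    # Naive registered-domain guess: last two labels (wrong for e.g. .co.uk).
    domain = host if _is_ip(host) else ".".join(host.split(".")[-2:])
    category = CATEGORY_DB.get(domain, "uncategorized")
    score = sum(WEIGHTS[name] for name, present in url_features(url).items() if present)
    return category, round(score, 2)


if __name__ == "__main__":
    print(categorize("https://www.example.com/about"))
    print(categorize("http://user@192.168.0.1/login"))
```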

These systems also play a crucial role in brand protection, identifying unauthorized use of trademarks, counterfeit product sites, and brand impersonation attempts. By maintaining comprehensive databases of URL classifications and continuously updating their models based on emerging threats, these platforms provide essential infrastructure for web security across industries.
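Brand-impersonation checks often start with lookalike-domain detection. The sketch below first normalizes a few common character substitutions. It then scores the first label of a domain against each protected brand name using the standard library's `difflib.SequenceMatcher` similarity ratio. The brand watch list, official domains, substitution table, and 0.8 threshold are all illustrative assumptions.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical watch list: brand -> domains the brand actually owns.
OFFICIAL_DOMAINS = {"paypal": {"paypal.com"}, "microsoft": {"microsoft.com"}}

# A few common visual substitutions seen in impersonation domains (not exhaustive).
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def lookalike_of(domain: str, threshold: float = 0.8) -> Optional[str]:
    """Return the brand a domain appears to imitate, or None."""
    domain = domain.lower()
    label = domain.split(".")[0]
    # Normalize digit swaps and the "rn" -> "m" trick (may over-match real words).
    normalized = label.translate(HOMOGLYPHS).replace("rn", "m")
    for brand, official in OFFICIAL_DOMAINS.items():
        if domain in official:
            return None  # the brand's own domain
        if SequenceMatcher(None, normalized, brand).ratio() >= threshold:
            return brand
    return None


if __name__ == "__main__":
    for d in ("paypa1.com", "rnicrosoft.net", "paypal.com", "example.com"):
        print(d, lookalike_of(d))
```

Note that an exact brand label on a foreign TLD, such as `paypal.xyz`, is also flagged. For brand protection, that is usually the desired behavior.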

The Next Frontier: Agentic AI Systems

The emergence of agentic AI represents a paradigm shift from reactive to proactive artificial intelligence systems. Modern enterprise agentic AI platforms enable organizations to deploy autonomous agents capable of complex reasoning, multi-step planning, and independent decision-making within defined parameters. These systems combine large language models with specialized tools and APIs to perform tasks that previously required human intervention.
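Stripped to its control flow, an agent of this kind is a loop:

1. A model proposes the next action.
2. The runtime executes the action only if its tool is on an allowlist.
3. The observation is fed back to the model.

The loop ends on a final answer, an escalation, or a step limit. In the sketch below, the "model" is a hypothetical scripted stand-in for what would be an LLM call in practice, and the tool name is invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Action:
    tool: Optional[str] = None          # tool to call, or None when finishing
    argument: str = ""
    final_answer: Optional[str] = None  # set when the agent is done


# Hypothetical tool registry; a real agent would wrap APIs or databases.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
}

Policy = Callable[[List[str]], Action]


def run_agent(policy: Policy, task: str, max_steps: int = 5) -> str:
    """Run the propose-act-observe loop within fixed boundaries."""
    history: List[str] = [f"task: {task}"]
    for _ in range(max_steps):
        action = policy(history)
        if action.final_answer is not None:
            return action.final_answer
        if action.tool not in TOOLS:
            # Outside the defined parameters: stop and hand off to a person.
            return f"ESCALATE: tool {action.tool!r} not permitted"
        observation = TOOLS[action.tool](action.argument)
        history.append(f"{action.tool}({action.argument}) -> {observation}")
    return "ESCALATE: step limit reached"


def scripted_policy(history: List[str]) -> Action:
    """Stand-in for an LLM: look up the order once, then answer from it."""
    if len(history) == 1:
        return Action(tool="lookup_order", argument="A123")
    return Action(final_answer=history[-1].split("-> ")[1])


if __name__ == "__main__":
    print(run_agent(scripted_policy, "Where is order A123?"))
```

The allowlist and step limit are the "defined parameters" in miniature. Anything outside them ends the loop with an escalation instead of an improvised action.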

Agentic AI systems are transforming business processes across industries. In customer service, AI agents handle complex inquiries by accessing multiple knowledge bases, executing transactions, and escalating issues when necessary. In software development, code generation agents not only write code but also test, debug, and optimize their outputs iteratively. Research from Berkeley’s Center for Human-Compatible AI highlights the importance of building agentic systems with robust safety measures and alignment mechanisms to ensure they operate within intended boundaries.

The architectural complexity of agentic systems requires sophisticated orchestration layers that manage agent interactions, resource allocation, and failure recovery. These platforms must balance autonomy with control, enabling agents to operate independently while maintaining oversight and intervention capabilities. Organizations implementing agentic AI report significant improvements in operational efficiency, with some processes seeing 10x productivity gains while maintaining or improving quality metrics.
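Failure recovery is one of the more mechanical parts of orchestration. A common pattern, sketched below, retries a failing step with exponential backoff and jitter. Once retries are exhausted, it hands the task to a fallback, such as a human review queue, instead of failing silently. The step and fallback callables are hypothetical. The `sleep` function is injectable so the sketch can be exercised without actually waiting.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def with_retries(step: Callable[[], T],
                 fallback: Callable[[Exception], T],
                 attempts: int = 4,
                 base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep,
                 rng: Optional[random.Random] = None) -> T:
    """Call `step`; after the n-th failure wait base_delay * 2**n plus jitter."""
    rng = rng or random.Random()
    last_error: Exception = RuntimeError("no attempts made")
    for n in range(attempts):
        try:
            return step()
        except Exception as exc:  # a real orchestrator would retry only transient errors
            last_error = exc
            if n < attempts - 1:
                delay = base_delay * (2 ** n)
                sleep(delay + rng.uniform(0, delay / 2))  # jitter spreads out retries
    # Retries exhausted: escalate rather than fail silently.
    return fallback(last_error)


if __name__ == "__main__":
    result = with_retries(lambda: 1 / 0,
                          lambda e: f"escalated to human queue ({type(e).__name__})",
                          sleep=lambda s: None)
    print(result)
```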

Governance and Compliance: The Critical Framework

As AI systems become more autonomous and influential in decision-making processes, ensuring compliance with regulatory requirements and ethical standards has become paramount. Specialized AI compliance management systems provide frameworks for monitoring, auditing, and controlling AI agent behavior to ensure adherence to organizational policies and regulatory requirements.

These compliance platforms address multiple challenges simultaneously: ensuring AI decisions are explainable and auditable, preventing bias and discrimination in automated decisions, maintaining data privacy and security, and demonstrating regulatory compliance across jurisdictions. The implementation of comprehensive logging and monitoring systems enables organizations to track every decision made by AI agents, understand the reasoning behind those decisions, and intervene when necessary.
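One way to make agent logs auditable is to append every decision to a hash-chained log. Each entry stores the hash of the previous entry, so altering an earlier entry breaks verification of the chain. The sketch below combines such a log with a simple policy gate that blocks disallowed actions and records the rationale either way. The blocked action, field names, and agent IDs are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

BLOCKED_ACTIONS = {"approve_loan_without_review"}  # hypothetical policy rule
GENESIS = "0" * 64


def _digest(prev: str, record: Dict[str, Any]) -> str:
    body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, record: Dict[str, Any]) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({"prev": prev, "record": record,
                             "hash": _digest(prev, record)})

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def gated_action(log: AuditLog, agent_id: str, action: str, rationale: str) -> bool:
    """Check policy, log the decision with its rationale, return whether to proceed."""
    allowed = action not in BLOCKED_ACTIONS
    log.append({"agent": agent_id, "action": action,
                "rationale": rationale, "allowed": allowed})
    return allowed
```

A hash chain makes tampering detectable, not impossible. Deleting the newest entries, for example, goes unnoticed unless the latest hash is also stored somewhere the log writer cannot modify.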

The regulatory landscape for AI continues to evolve rapidly, with frameworks like the EU’s AI Act and various national AI strategies establishing requirements for transparency, accountability, and human oversight. Organizations must implement robust governance structures that can adapt to changing regulations while maintaining operational efficiency. This includes establishing clear chains of responsibility for AI decisions, implementing regular audits and assessments, and maintaining documentation that demonstrates compliance efforts.

Integration Challenges and Solutions

Implementing these advanced AI and data processing technologies requires careful consideration of integration challenges. Organizations must navigate technical complexity, ensure interoperability between systems, and maintain performance at scale. Successful implementations typically follow a phased approach, starting with pilot projects that demonstrate value before expanding to enterprise-wide deployments.
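Phased rollouts are often implemented by deterministically assigning users or tenants to buckets. A pilot can then cover a small, stable percentage, and widening it later does not reshuffle who is included. The sketch below hashes a hypothetical feature name and user ID with SHA-256 into 10,000 buckets. The names and percentages are illustrative.

```python
import hashlib


def in_rollout(feature: str, user_id: str, percent: float) -> bool:
    """True if user_id falls in the first `percent`% of buckets for `feature`."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # stable bucket in [0, 9999]
    return bucket < percent * 100


if __name__ == "__main__":
    users = [f"user{i}" for i in range(10_000)]
    pilot = sum(in_rollout("ai-redaction", u, 5) for u in users)
    print(f"{pilot} of {len(users)} users in the 5% pilot")
```

The bucket depends only on the feature name and user ID. Raising `percent` from 5 to 25 therefore keeps every pilot user enrolled while adding new ones.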

Key integration considerations include API design and management, data pipeline architecture, and security infrastructure. Modern platforms increasingly adopt microservices architectures that enable flexible deployment and scaling while maintaining system reliability. The use of containerization technologies and orchestration platforms has simplified deployment across diverse infrastructure environments, from on-premises data centers to multi-cloud configurations.

Organizations must also address the human factors in technology adoption. This includes training staff to work effectively with AI systems, establishing new workflows that leverage automation capabilities, and managing organizational change as roles and responsibilities evolve. Successful implementations invest significantly in change management and continuous training to ensure that human workers can effectively collaborate with AI systems.

Future Directions and Emerging Trends

The convergence of AI technologies with data processing capabilities continues to accelerate, driven by advances in model architectures, computing infrastructure, and algorithmic efficiency. Emerging trends include the development of multimodal AI systems that can process and generate content across text, image, audio, and video modalities simultaneously. These capabilities enable more sophisticated content moderation, richer document understanding, and more natural human-AI interactions.

The democratization of AI technologies through low-code and no-code platforms is enabling organizations without extensive technical resources to implement sophisticated data processing solutions. This trend is particularly important for small and medium-sized enterprises that need to compete with larger organizations in terms of operational efficiency and customer experience.

Looking ahead, the integration of quantum computing with AI systems promises to unlock new capabilities in optimization, pattern recognition, and cryptographic applications. While practical quantum advantage for AI applications remains several years away, organizations are already exploring hybrid classical-quantum algorithms for specific use cases.

Conclusion: Building Responsible AI Ecosystems

The technologies discussed in this article represent critical infrastructure for the digital economy. From content moderation that maintains safe online spaces to agentic AI systems that automate complex business processes, these tools are reshaping how organizations operate and compete. That influence carries responsibility: organizations must carefully consider the ethical, legal, and social implications of their AI deployments.

Success in implementing these technologies requires a holistic approach that balances technical capabilities with governance frameworks, combines automation with human oversight, and prioritizes both efficiency and responsibility. Organizations that successfully navigate this balance will be best positioned to leverage AI’s transformative potential while maintaining stakeholder trust and regulatory compliance.

As we move forward, the continued evolution of AI and data processing technologies will create new opportunities and challenges. Organizations must remain agile, continuously updating their strategies and systems to adapt to technological advances and changing requirements. By building on the foundation of robust content moderation, privacy protection, intelligent document processing, and compliant AI systems, businesses can create sustainable competitive advantages while contributing to a safer, more efficient digital ecosystem.

Comprehensive AI-powered data processing is not a destination but an ongoing evolution. Organizations that embrace it, investing in both technology and governance, will be best positioned to thrive in an increasingly digital and AI-driven future. The key lies not just in adopting these technologies, but in implementing them thoughtfully, responsibly, and with a clear vision of their role in creating value for all stakeholders.